NVIDIA GeForce RTX GPUs bring unparalleled performance to PC laptops, desktops, and workstations.

Featuring specialized AI Tensor Cores capable of delivering over 1,300 trillion operations per second (TOPS), they are designed for innovative applications in gaming, content creation, and everyday productivity.

For workstations, NVIDIA RTX GPUs exceed 1,400 TOPS, offering next-level AI acceleration and efficiency.

Productivity and Creativity With AI-Powered Chatbots

Tools like ChatGPT have revolutionized user interactions with AI, transforming basic, rule-based computing into dynamic, conversational experiences.

From suggesting vacation ideas to writing emails, poetry, or code, chatbots powered by large language models (LLMs) have reshaped the way people engage with technology.

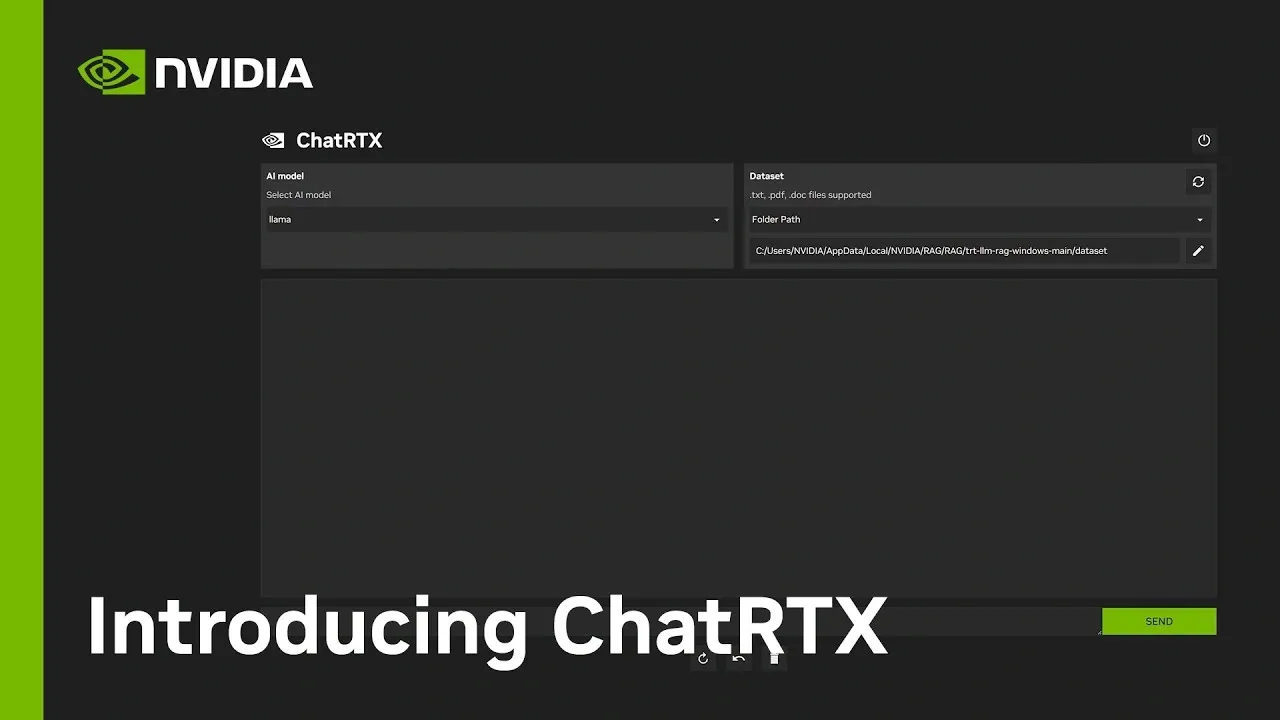

In March, NVIDIA introduced ChatRTX, a demo app that allows users to personalize a GPT LLM with their own content, including documents, notes, and images.

Leveraging retrieval-augmented generation (RAG), NVIDIA TensorRT-LLM, and RTX acceleration, ChatRTX delivers fast, private results by running locally on RTX PCs or workstations.
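RAG works in two steps. First, it retrieves the passages from the user's own content that best match a question. Second, it adds those passages to the prompt so the model answers from that context. The Python sketch below illustrates only this retrieve-and-augment step. For scoring, it swaps in simple word-count cosine similarity in place of the neural embeddings and vector index a production system such as ChatRTX would typically use. The function names and sample notes are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its word tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the question."""
    q = tokenize(question)
    ranked = sorted(docs, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, docs: list[str], k: int = 1) -> str:
    """Augment the question with retrieved context before it is sent to an LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(question, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    # Hypothetical personal notes standing in for a user's documents.
    notes = [
        "The team offsite is scheduled for June 12 in Denver.",
        "Quarterly budget review covers GPU purchases.",
        "Recipe: mix flour, water, and yeast for pizza dough.",
    ]
    print(build_prompt("When is the team offsite?", notes))
```

Because only the best-matching note is placed in the prompt, the model can answer from the user's own data without being retrained on it.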

NVIDIA also supports a wide range of foundation models, including Gemma 2, Mistral, and Llama 3, which can run locally on NVIDIA GPUs for fast, secure performance without relying on cloud services.

AI Applications With RTX Acceleration

AI is being integrated into various applications, from games and content creation tools to software development and productivity platforms.

This expansion is fueled by NVIDIA’s RTX-accelerated developer tools, software development kits (SDKs), and frameworks, which simplify running AI models locally in popular applications.

Brave Browser’s Leo AI, powered by NVIDIA RTX GPUs and the open-source Ollama platform, enables users to run local LLMs like Llama 3 directly on their RTX PCs or workstations.

Because the model runs on the local GPU, prompts and data stay on the device and no cloud service is required, which keeps responses fast and private.

NVIDIA’s optimizations for tools like Ollama accelerate tasks such as summarizing articles, answering questions, and extracting insights directly within the Brave browser.

Users can toggle between local and cloud models for greater flexibility.

Brave’s blog provides simple instructions for adding local LLM support via Ollama.

Once configured, Leo AI can use locally hosted LLMs for queries, providing a seamless and secure AI experience.
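To confirm that a local model responds before pointing Leo at it, the Python sketch below sends one prompt to Ollama's REST API using only the standard library. It assumes Ollama is running at its default address (`http://localhost:11434`) and that a model named `llama3` has already been pulled, for example with `ollama pull llama3`. Adjust both for your setup. This sketch is not Brave's integration code.

```python
import json
import urllib.request

# Assumption: Ollama's default local address; change it if your server differs.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_payload(prompt: str, model: str = "llama3") -> bytes:
    """Encode a non-streaming generate request as JSON bytes."""
    return json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")


def ask_local_llm(prompt: str, model: str = "llama3", timeout: float = 60.0) -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    request = urllib.request.Request(
        OLLAMA_URL,
        data=build_payload(prompt, model),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        # With "stream": False, Ollama returns a single JSON object whose
        # "response" field holds the full completion.
        return json.loads(resp.read())["response"]


if __name__ == "__main__":
    try:
        print(ask_local_llm("Summarize retrieval-augmented generation in one sentence."))
    except OSError as err:
        # URLError, HTTPError, and timeouts all subclass OSError: report
        # the problem (e.g. no server running) instead of crashing.
        print(f"Could not reach Ollama at {OLLAMA_URL}: {err}")
```

If this prints a completion, the local server is working. Use the endpoint and model name given in Brave's instructions when configuring Leo itself.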

Agentic AI: Solving Problems Autonomously

The next frontier in AI is agentic AI, which uses advanced reasoning and iterative planning to solve complex, multi-step problems autonomously.

Applications like AnythingLLM demonstrate how agentic AI is transforming productivity and creativity.

This tool enables users to deploy AI agents for tasks such as web searches or meeting scheduling, interact with documents through intuitive interfaces, and automate intricate workflows.
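At its core, an agent like this runs a loop. It decides on the next action, calls a tool, observes the result, and repeats until the goal is met or a step budget runs out. The Python sketch below shows that loop with two stub tools. The tool names, the task, and the rule-based `plan_next_step` function (standing in for the LLM's reasoning) are all hypothetical. None of them reflect AnythingLLM's internals.

```python
from typing import Callable, Optional


def search_web(query: str) -> str:
    """Stub tool: a real agent would call a search API here."""
    return f"Results for '{query}': free slot: Friday 10:00"


def schedule_meeting(slot: str) -> str:
    """Stub tool: a real agent would call a calendar API here."""
    return f"Meeting booked for {slot}."


TOOLS: dict[str, Callable[[str], str]] = {
    "search_web": search_web,
    "schedule_meeting": schedule_meeting,
}


def plan_next_step(goal: str, history: list[str]) -> Optional[tuple[str, str]]:
    """Hypothetical planner standing in for an LLM: return (tool, argument), or None when done."""
    searches = [h for h in history if h.startswith("search_web:")]
    if not searches:
        return ("search_web", goal)
    if not any(h.startswith("schedule_meeting:") for h in history):
        # Use what the previous observation revealed to choose the next action.
        slot = searches[-1].rsplit("free slot: ", 1)[1]
        return ("schedule_meeting", slot)
    return None  # Goal satisfied; stop iterating.


def run_agent(goal: str, max_steps: int = 5) -> list[str]:
    """Plan, act, and observe until the planner finishes or the step budget is spent."""
    history: list[str] = []
    for _ in range(max_steps):
        step = plan_next_step(goal, history)
        if step is None:
            break
        tool_name, arg = step
        observation = TOOLS[tool_name](arg)
        history.append(f"{tool_name}: {observation}")
    return history


if __name__ == "__main__":
    for entry in run_agent("find a free slot for the team sync"):
        print(entry)
```

The `max_steps` budget guarantees the loop ends even if a planner never declares the goal complete. Real agents add a safeguard like this for the same reason.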

Running on RTX GPUs, AnythingLLM offers fast, private, and responsive AI workflows entirely on local systems. It also works offline, drawing on local data and tools that cloud-based services cannot access.

Empowering Users

The AnythingLLM Community Hub provides access to system prompts, productivity-enhancing slash commands, and tools for building specialized AI agent skills.
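A slash command is essentially a shortcut that expands into a saved prompt before the text reaches the model. The Python sketch below illustrates the idea with a hypothetical command table. The command names, templates, and `expand_slash_command` helper are illustrative assumptions, not AnythingLLM's actual format.

```python
# Hypothetical command table: each slash command maps to a prompt template.
COMMANDS = {
    "/summarize": "Summarize the following text in three bullet points:\n{text}",
    "/email": "Rewrite the following notes as a polite, concise email:\n{text}",
}


def expand_slash_command(message: str) -> str:
    """Replace a leading slash command with its saved prompt; pass other text through."""
    command, _, rest = message.partition(" ")
    template = COMMANDS.get(command)
    if template is None:
        return message  # Not a known command: send the message unchanged.
    return template.format(text=rest.strip())


if __name__ == "__main__":
    print(expand_slash_command("/summarize RTX GPUs run LLMs locally for private, fast AI."))
```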

By enabling users to run agentic AI workflows with full privacy, AnythingLLM fosters innovation and simplifies experimentation with cutting-edge technologies.

Whether for gaming, productivity, or advanced AI experimentation, NVIDIA GeForce RTX GPUs continue to lead the way in delivering powerful, secure, and efficient AI-driven solutions.